Introduction

On July 4, 2025, the "One Big Beautiful Bill" was signed into law without a proposed 10-year moratorium on state laws regulating artificial intelligence (AI). In the absence of any federal AI law, states are rushing to regulate the development and use of AI across industry sectors on an ad hoc basis. Meanwhile, companies that are embracing AI in their day-to-day business operations are understandably confused about AI regulation, compliance, and potential liability exposure. This article highlights some of the landmark state AI legislation enacted to date.

White House Executive Order on AI

On December 11, 2025, President Trump signed an Executive Order "Ensuring a National Policy Framework for Artificial Intelligence," with the stated policy "to sustain and enhance the United States' global dominance through a minimally burdensome national policy framework for AI." The order mandates the creation of an AI Litigation Task Force "whose sole responsibility shall be to challenge State AI laws" inconsistent with this policy. Meanwhile, states are proposing and passing AI legislation at record speed in the absence of any overriding AI federal law.

Colorado Artificial Intelligence Act

To date, Colorado has enacted the most comprehensive and controversial AI law in the United States. Enacted in May 2024, the law is currently scheduled to go into effect on June 30, 2026. The Colorado Artificial Intelligence Act applies to both "developers" and "deployers" of AI systems that do business in Colorado and is intended to protect Colorado residents. The stated purpose of the law is to prevent "algorithmic discrimination" by AI systems that results in unlawful differential treatment and adversely impacts persons on the basis of a protected class such as age, color, disability, ethnicity, national origin, race, and sex, among others. The law specifically targets AI systems used to make "consequential decisions" with respect to an individual's access to education, employment, loans and financial services, insurance, healthcare, housing, and legal services.

The Colorado AI Act requires companies to develop and implement an AI risk management policy and program, including an AI impact assessment to identify, document, and mitigate known or foreseeable risks of algorithmic discrimination. Companies are required to disclose the use of high-risk AI systems to consumers and to explain the basis for any adverse consequential decision made using such a system; consumers may appeal such decisions for human review. Moreover, companies must provide notice on their website disclosing the type of high-risk AI systems used, how the company manages the risk of algorithmic discrimination, and the nature and source of information collected and used by the AI system. [Note: These requirements do not apply to employers that have fewer than 50 full-time employees, do not use their own data to train the AI system, and make available any impact assessment completed by the developer of the AI system.] The law is enforceable by the Colorado Attorney General.

Utah Artificial Intelligence Policy Act

The Utah Artificial Intelligence Policy Act took effect on May 1, 2024, and has an automatic sunset provision of July 2027. The Utah law is the first to specifically focus on the transparency and use of generative AI (GenAI), which became mainstream with the launch of ChatGPT in November 2022. The law requires disclosure that a person is interacting with GenAI as opposed to a human. It applies to a "supplier" engaged in a consumer transaction, or a person or entity in a "regulated occupation" that requires a Utah State license or certificate and engages in a "high-risk" interaction with GenAI systems. The latter refers to an interaction that either involves the collection of sensitive personal information (such as health, biometric, or financial data), or provides financial, legal, medical, or mental health advice or services that could be reasonably relied upon by an individual to make a significant personal decision.

Utah also passed a bill effective May 7, 2025, with respect to the regulation of mental health chatbots. The law applies to interactive communications that mimic conversations between an individual and a licensed mental health therapist. The law mandates the disclosure and identification of a chatbot as AI and not a human. A supplier of a mental health chatbot may not sell or share any individually identifiable health information of a Utah user with any third party. Moreover, a supplier may not use a mental health chatbot to advertise a specific product or service unless the chatbot clearly and conspicuously identifies it as an advertisement, promotion, or sponsor. The law is enforceable by the Utah Attorney General with fines and penalties of up to $2,500 per violation.

Texas Responsible Artificial Intelligence Governance Act

The Texas Responsible Artificial Intelligence Governance Act (TRAIGA) was passed in June 2025, takes effect on January 1, 2026, and was designed to protect Texas consumers. TRAIGA applies to both developers and deployers of AI systems in the State of Texas. The law prohibits the development and deployment (use) of AI systems that intentionally (1) encourage or incite physical harm or criminal activity; (2) infringe, restrict, or otherwise impair an individual's constitutional rights; (3) unlawfully discriminate against a protected class (including race, color, national origin, sex, age, religion, or disability) in violation of state or federal law; or (4) produce or distribute certain sexually explicit content and child pornography, including the use of deep fake videos and texts describing sexual conduct while imitating minors under the age of 18. The law also requires full disclosure to consumers that they are interacting with AI. Such disclosure must be clear and conspicuous, in plain language, and may be provided using a hyperlink directing a consumer to a page on the company's website. The Texas Attorney General has the exclusive authority to enforce violations of the Act and may impose fines and penalties of up to $12,000 per violation (for curable offenses) and up to $200,000 per violation (for non-curable offenses).

California Automated Decision-Making Technology Regulations

On September 23, 2025, the long-anticipated regulations under the California Consumer Privacy Act (CCPA) regarding the use of automated decision-making technology (ADMT Regs) were issued; they go into effect on January 1, 2027. The regulations define ADMT as "any technology that processes personal information and uses computation to replace human decision-making or substantially replace human decision-making." As a practical matter, AI is a form of ADMT that relies on machine learning, data analytics, and algorithms to make automated decisions with little to no human involvement. The ADMT Regs generally apply to for-profit businesses that conduct business in the State of California and are otherwise subject to the CCPA thresholds, including businesses that (1) have an annual gross revenue in excess of $25 million (adjusted for inflation); (2) buy, receive, sell, or share personal information of 100,000 or more California residents or households; or (3) derive 50% or more of their revenue from the sale or sharing of personal information.
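For readers who maintain compliance tooling, the three thresholds above can be sketched as a simple screening check. This is an illustration only, not a legal test: the `BusinessProfile` record, its field names, and the function name are hypothetical, and the $25 million figure is used as stated above even though it is adjusted for inflation in practice.

```python
from dataclasses import dataclass

# Figures as described in the text above. The revenue figure is
# inflation-adjusted in practice, so treat it as a placeholder.
REVENUE_THRESHOLD_USD = 25_000_000
RESIDENT_THRESHOLD = 100_000
PI_REVENUE_SHARE_THRESHOLD = 0.50


@dataclass
class BusinessProfile:
    """Hypothetical record of the facts the thresholds depend on."""
    for_profit: bool
    does_business_in_california: bool
    annual_gross_revenue_usd: float
    ca_residents_or_households_with_pi: int
    pi_revenue_share: float  # share of revenue from selling/sharing PI, 0.0 to 1.0


def meets_ccpa_thresholds(b: BusinessProfile) -> bool:
    """Return True if any one of the three thresholds is met."""
    if not (b.for_profit and b.does_business_in_california):
        return False
    return (
        b.annual_gross_revenue_usd > REVENUE_THRESHOLD_USD        # (1) "in excess of"
        or b.ca_residents_or_households_with_pi >= RESIDENT_THRESHOLD  # (2) "or more"
        or b.pi_revenue_share >= PI_REVENUE_SHARE_THRESHOLD       # (3) "or more"
    )
```

Because the thresholds are alternatives, a small business with modest revenue can still be covered if it handles the personal information of enough California residents. A positive result here is only a prompt for legal review, not a conclusion that the ADMT Regs apply.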

The ADMT Regs set forth consumer notice requirements for companies that use ADMT to make a "significant decision" concerning a California consumer. This is defined as a "decision that results in the provision or denial of financial or lending services, housing, education enrollment or opportunities, employment or independent contracting opportunities or compensation, or healthcare services." Companies that use ADMT for such decisions must provide consumers with a "pre-use notice" that covers the following: (i) the business's use of ADMT; (ii) the consumer's right to "opt-out" of ADMT; and (iii) the consumer's right to "access" ADMT. The business must acknowledge receipt of consumer requests within 10 days and respond to the requests within 45 days. The pre-use notice must set forth the specific purpose for which the business plans to use ADMT, how the ADMT processes personal information to make a significant decision, the categories of personal information that are analyzed by the ADMT, the type of output generated by the ADMT, and how a decision would be made if a consumer opts out. The notice must be presented prominently and conspicuously to the consumer at or before the time the business collects the consumer's personal information.
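A request-tracking system could compute the two response deadlines described above along the following lines. This is a minimal sketch under a stated assumption: the text above does not say whether the 10-day and 45-day periods run in calendar or business days, so the code simply counts calendar days. The function name and dictionary keys are hypothetical.

```python
from datetime import date, timedelta

# Periods as described in the text above.
# ASSUMPTION: both are counted in calendar days; confirm the counting
# method against the regulations before relying on these dates.
ACKNOWLEDGE_WITHIN_DAYS = 10
RESPOND_WITHIN_DAYS = 45


def request_deadlines(received: date) -> dict[str, date]:
    """Return the acknowledgment and response deadlines for a consumer request."""
    return {
        "acknowledge_by": received + timedelta(days=ACKNOWLEDGE_WITHIN_DAYS),
        "respond_by": received + timedelta(days=RESPOND_WITHIN_DAYS),
    }


deadlines = request_deadlines(date(2027, 1, 4))
print(deadlines["acknowledge_by"])  # 2027-01-14
print(deadlines["respond_by"])      # 2027-02-18
```

Keeping the periods as named constants makes it straightforward to swap in a business-day calculation if counsel advises that one applies.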

Businesses that use ADMT for a significant decision concerning consumers are required to conduct a risk assessment. This assessment requirement also applies to businesses that process the personal information of consumers which they intend to use to train an ADMT for a significant decision concerning a consumer. The overall purpose of the risk assessment is to determine whether the risks to consumers' privacy from the processing of their personal information outweigh the benefits. Specifically, with respect to the use of ADMT, the risk assessment must address the following: the logic of the ADMT (including any assumptions or limitations of the logic); the output of the ADMT and how the business will use the output to make a significant decision; and policies, procedures, and training to ensure that the business's ADMT works for the stated purpose and does not unlawfully discriminate based upon protected characteristics. A business must conduct and document a risk assessment prior to using ADMT, repeat it at least once every 3 years thereafter, and maintain the risk assessment for 5 years. Additionally, a business must submit an attestation to the California Privacy Protection Agency (CPPA) that it conducted a risk assessment in accordance with the ADMT Regs.
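The review and retention periods above lend themselves to a simple calendar calculation. The sketch below is illustrative only and rests on two assumptions not stated in the text: that both periods are measured from the date the assessment was completed, and that a February 29 start date rolls back to February 28 in non-leap years. The function names and dictionary keys are hypothetical.

```python
from datetime import date

REVIEW_INTERVAL_YEARS = 3   # "at least once every 3 years"
RETENTION_YEARS = 5         # "maintain the risk assessment for 5 years"


def add_years(d: date, years: int) -> date:
    """Add whole years to a date, mapping Feb 29 to Feb 28 when needed."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        # Only raised for Feb 29 when the target year is not a leap year.
        return d.replace(year=d.year + years, day=28)


def risk_assessment_schedule(completed: date) -> dict[str, date]:
    """ASSUMPTION: both periods are measured from the completion date."""
    return {
        "next_review_due": add_years(completed, REVIEW_INTERVAL_YEARS),
        "retain_until": add_years(completed, RETENTION_YEARS),
    }


schedule = risk_assessment_schedule(date(2027, 1, 1))
print(schedule["next_review_due"])  # 2030-01-01
print(schedule["retain_until"])     # 2032-01-01
```

The next-review date is an outer limit: nothing in the text above prevents a business from reassessing sooner, for example after a material change to how it uses ADMT.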

AI Governance and Risk Management

As companies race to embrace the competitive advantages and operational efficiencies offered by the strategic development and deployment of AI tools in the face of a tumultuous legislative landscape, it is increasingly imperative that they develop robust AI governance and risk management practices.

As noted by the National Institute of Standards and Technology (NIST), the "risks posed by AI systems are in many ways unique," and "AI risk management is a key component of responsible development and use of AI systems." To that end, NIST created a comprehensive Artificial Intelligence Risk Management Framework (AI RMF) in January 2023, designed to assist organizations in minimizing the potential negative impact of AI systems. The NIST AI RMF is intended to be voluntary and non-sector specific to provide greater flexibility to organizations that adopt all or part of the framework. The four core functions addressed by the framework include (1) Govern, (2) Map, (3) Measure, and (4) Manage AI systems and risks. An organization should document its AI program, policies, and procedures, which should be routinely reviewed and updated.

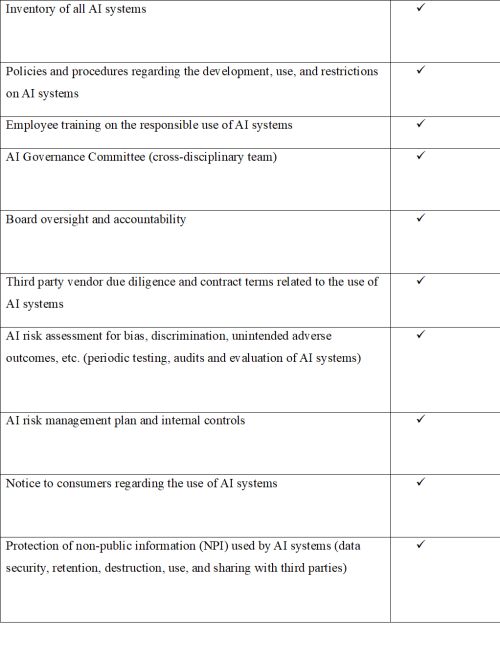

Factors for Companies to Consider as They Develop Their AI Governance Programs

