In 2024, Regulation (EU) 2024/1689 laying down harmonised rules on artificial intelligence, more commonly known as the AI Act, entered into force with the aim of ensuring responsible AI development and use in the EU.

The objective of the Act is to develop a strong regulatory framework that benefits all by:

- Setting legal requirements for developers and AI providers;

- Classifying AI systems by level of risk:

  - Minimal risk: systems such as spam filters, which are largely unregulated, although companies are free to create rules for themselves;

  - Limited risk: systems such as chatbots, which must disclose that AI is being used;

  - High risk: AI used in sensitive areas like hiring, education and healthcare;

  - Unacceptable risk: systems banned for violating human rights, such as social scoring of the kind associated with China, which monitors the public's behaviour and removes virtually all boundaries and privacy.

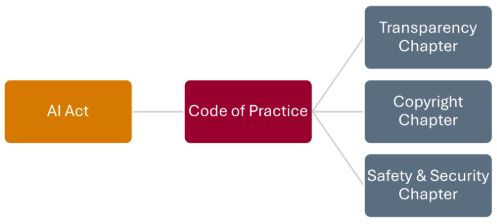

Article 56 of the Act envisioned the creation of a voluntary Code of Practice to provide practical guidance on implementing its principles. In July 2025, the European Commission published the General-Purpose AI Code of Practice (Code), which was reviewed and endorsed by the European AI Board. The Code is made up of three chapters and targets general-purpose artificial intelligence (GPAI), meaning AI designed to perform a wide range of tasks, such as ChatGPT and Midjourney, amongst others.

The Transparency and Copyright Chapters may be used by all GPAI providers, but the Safety and Security Chapter applies only to GPAI models with systemic risk, meaning models that may pose a threat to public health and safety or to the rights of society at large. In practice, only a few of the most advanced models need to subject themselves to this Chapter.

The Code's aim is to strengthen the functioning of the internal market and protect fundamental rights from potential negative impacts of AI within the Union, whilst also fostering innovation in line with Article 1(1) of the AI Act.

To achieve this, the Code has two specific objectives:

- To offer guidance on complying with Articles 53 and 55, while recognising that following the Code alone does not guarantee compliance.

- To help GPAI providers meet their legal duties under the AI Act and to allow the AI Office to assess compliance.

The Transparency Chapter

Main Aim: Documentation

Signatories, meaning providers who have formally endorsed and agreed to abide by the Code, commit to fulfilling the transparency and documentation obligations under Article 53(1)(a) and (b) of the EU AI Act by:

- Creating and maintaining detailed documentation of the GPAI models;

- Sharing relevant information with downstream providers, developers that use or build on GPAI models created by another company, and the AI Office when requested;

- Ensuring that all documentation is accurate and properly managed.

This commitment is met by first preparing the documentation before the GPAI model is even put on the market. The EU has published a standardised form that may be used as guidance. Keeping the document up to date, and retaining its old versions, upholds transparency and accountability. Once this information is prepared, providers must maintain a channel of communication through which downstream providers and the AI Office may request access. Upon receiving such a request, providers must share the relevant parts of the documentation within the required timeframes, generally 14 days.
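The record-keeping described above can be sketched in a few lines of Python. This is only an illustrative sketch: the names `DocumentationLog` and `response_due` are hypothetical, and the 14-day figure is the general timeframe mentioned above, so the deadline that actually applies to a given request should be checked.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta

# Assumption: 14 days is the general timeframe mentioned in the text;
# the deadline that applies to a specific request may differ.
DEFAULT_RESPONSE_DAYS = 14


@dataclass
class DocumentationLog:
    """Keeps every version of the model documentation, newest last."""
    versions: list = field(default_factory=list)

    def publish(self, version: str, released: date) -> None:
        # Old versions are never overwritten, only appended to.
        self.versions.append((version, released))

    def current(self) -> str:
        return self.versions[-1][0]


def response_due(received: date, days: int = DEFAULT_RESPONSE_DAYS) -> date:
    """Date by which a documentation request should be answered."""
    return received + timedelta(days=days)


log = DocumentationLog()
log.publish("1.0", date(2025, 8, 1))
log.publish("1.1", date(2025, 9, 1))
print(log.current(), response_due(date(2025, 9, 10)))
```

Appending rather than overwriting is the design choice that matters here, since it keeps earlier versions available for later review.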

The Copyright Chapter

Main Aim: Policy

This Chapter is beneficial because the AI Act obliges GPAI providers to establish a policy in line with the Union's law on copyright. In light of Recital 105 and the recognition that GPAI models use large quantities of potentially copyrighted content, providers must adopt a formal, documented copyright-compliance policy. Copyright-protected content may therefore only be used once the rightsholder has granted authorisation, unless a copyright exception or limitation applies, such as those introduced by Directive (EU) 2019/790.

Signatories commit to the Copyright Chapter and the relevant Directives by:

- Making the policy publicly available;

- Only using lawfully accessible works and not bypassing technical protections designed to prevent unauthorised access;

- Honouring signals, and respecting reservations, from content creators that their work is not to be used by AI, even if their work is publicly available;

- Preventing their AI models, and systems built upon them, from producing outputs which infringe copyright;

- Ensuring a point of contact through which affected rightsholders may submit a complaint electronically.
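As an illustration of what honouring a machine-readable reservation might look like in practice, the sketch below assumes that the content creator expresses the signal through a `robots.txt` file, which is only one possible mechanism. The crawler name `ExampleAIBot`, the sample file and the helper `may_crawl` are hypothetical; the check itself uses Python's standard `urllib.robotparser` module.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt in which a site reserves its content against
# one AI crawler while leaving it open to everyone else.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def may_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True only if robots.txt permits this crawler to fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(may_crawl(ROBOTS_TXT, "ExampleAIBot", "https://example.com/article"))
print(may_crawl(ROBOTS_TXT, "OtherBot", "https://example.com/article"))
```

A check of this kind addresses only the signal itself. Whether a work is lawfully accessible, or protected by technical measures, has to be assessed separately.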

The Safety and Security Chapter

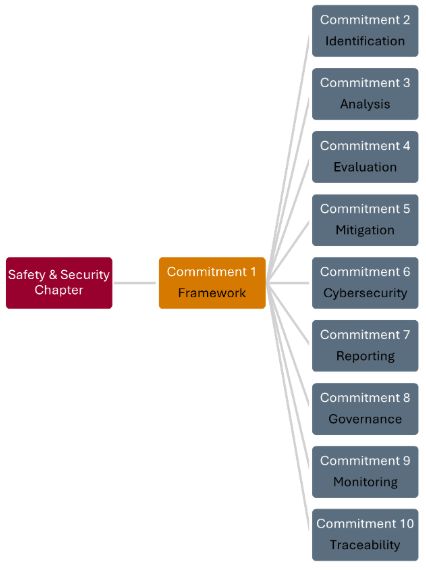

This Chapter outlines 10 suggestions, called Commitments, designed to guide providers throughout the lifecycle of their models, even years after the models have been placed on the market. The first Commitment outlines the essential elements that must be included in the model documentation, while the remaining Commitments build on this foundation by giving providers an indication of the proper way of compiling, structuring and maintaining this information.

Commitment 1

This sets out a Safety and Security Framework (SSF), which clearly documents the processes and measures used to reduce risk in a structured and systematic way.

Signatories to this Chapter must:

- Create the Framework: It contains a detailed overview of all planning and implementation procedures, a definition and justification of what is considered an acceptable risk, the roles and responsibilities for managing that risk, and the process for updating the Framework.

- Implement the Framework: Continuously monitor and assess the model through evaluations, post-market screening and review of serious incidents. Conduct a full systemic risk assessment before market release and apply additional mitigations if risks are unacceptable.

- Update the Framework: Review and update the Framework at least annually, or sooner if developments or serious incidents occur, ensuring it remains effective and up to date.

Under Article 52(1) of the AI Act, providers of GPAI models with systemic risk must notify the Commission without delay, and in any event within 2 weeks of the systemic-risk threshold being met or of it becoming known that it will be met. Under the Code, the Framework must then be confirmed no later than 4 weeks after that notification and no later than 2 weeks before the model is placed on the market.

Commitment 2

Providers must identify both current and potential systemic risks from the model to prevent harm. This is done by reviewing internal processes, examining external sources, comparing similar models, and using any relevant information provided by the AI Office.

Commitment 3

The Signatory must keep analysing data to assess whether a risk is acceptable or not. This information may be collected by:

- Gathering model-independent information, including web searches, literature reviews, interviews and feedback;

- Carrying out actual testing, such as Q&As, to determine the likelihood of a systemic risk occurring;

- Conducting post-market monitoring to detect new risks and confirm prior risk assessments, whilst keeping the Framework up to date.

Commitment 4

All systemic risks identified and analysed must be evaluated against objective criteria to determine if they are acceptable for market release. Signatories must describe and justify each risk by indicating its probability of occurring and the potential impact.
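One simple way to picture this evaluation is as a probability-times-impact score compared against a pre-defined criterion. The sketch below is purely illustrative: the 1 to 5 impact scale, the multiplication rule and the threshold `ACCEPTABLE_SCORE` are hypothetical assumptions, not criteria taken from the Code, which leaves the choice and justification of criteria to the Signatory.

```python
from dataclasses import dataclass

# Hypothetical acceptance threshold; a real one would be defined and
# justified in the provider's own Framework.
ACCEPTABLE_SCORE = 1.0


@dataclass(frozen=True)
class SystemicRisk:
    name: str
    probability: float   # estimated likelihood, between 0 and 1
    impact: int          # hypothetical severity scale: 1 (minor) to 5 (severe)
    justification: str   # why these estimates were chosen

    def score(self) -> float:
        return self.probability * self.impact


def is_acceptable(risk: SystemicRisk, threshold: float = ACCEPTABLE_SCORE) -> bool:
    """Compare the risk score against the pre-defined acceptance criterion."""
    if not 0.0 <= risk.probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if not 1 <= risk.impact <= 5:
        raise ValueError("impact must be between 1 and 5")
    return risk.score() <= threshold


risk = SystemicRisk("example misuse scenario", 0.1, 4, "based on internal testing")
print(risk.name, risk.score(), is_acceptable(risk))
```

Recording the justification alongside the numbers mirrors the requirement to describe and justify each risk, rather than merely scoring it.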

Commitment 5

Effective safety measures must be maintained continuously, and precautions must be in place against hostile attempts, such as jailbreaking. The Code provides examples of safety mitigations, including data filtering, monitoring inputs and outputs, and transparency tools.

Commitment 6

An adequate level of cybersecurity is necessary to prevent unauthorised access or data leaks. Since these models are already risky in nature, Signatories must protect them and prevent further risk. A model is exempt from this requirement only if its provider can demonstrate that its capabilities are inferior to those of another model whose parameters are publicly available.

Commitment 7

Before placing a model on the market, a Safety and Security Model Report must be submitted to the AI Office. The report must describe the model's capabilities, uses and specifications, including topics it may refuse, and explain why each risk was deemed acceptable and under what conditions it would no longer be. To satisfy this Commitment, providers must consolidate all information from the previous Commitments in a single document, keep it updated and submit it to the AI Office.

Commitment 8

Organisational accountability is essential and is achieved by establishing internal structures, defining responsibilities and allocating appropriate human, financial and computational resources. This formalises responsibilities rather than leaving them theoretical.

Commitment 9

Signatories must have methods to identify, track and capture serious incidents and near misses through monitoring of online forums, police reports and research papers. These incidents must be reported to the AI Office and, where applicable, national authorities within 2 to 15 days. Reports should include when and where the incident occurred and the resulting harm. All documents must be retained for 5 years.
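The reporting window and retention period lend themselves to a small date calculation, sketched below. Several points are assumptions made for illustration: the text above does not say which window within the 2 to 15 day range applies to which kind of incident, nor the date from which it is counted, so the window is passed in as a parameter and counted from a hypothetical "became aware" date. The names `IncidentReport`, `report_due` and `retain_until` are likewise hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta

RETENTION_YEARS = 5                       # retention period stated in the text
MIN_REPORT_DAYS, MAX_REPORT_DAYS = 2, 15  # reporting range stated in the text


@dataclass(frozen=True)
class IncidentReport:
    occurred_on: date   # when the incident occurred
    location: str       # where it occurred
    harm: str           # the resulting harm

    def is_complete(self) -> bool:
        # A report should state when, where and what harm resulted.
        return bool(self.location.strip()) and bool(self.harm.strip())


def report_due(aware_on: date, window_days: int) -> date:
    """Latest reporting date, assuming the window runs from awareness."""
    if not MIN_REPORT_DAYS <= window_days <= MAX_REPORT_DAYS:
        raise ValueError("window must be between 2 and 15 days")
    return aware_on + timedelta(days=window_days)


def retain_until(created_on: date) -> date:
    """Date until which a document should be kept."""
    target_year = created_on.year + RETENTION_YEARS
    try:
        return created_on.replace(year=target_year)
    except ValueError:
        # 29 February has no counterpart in a non-leap target year.
        return created_on.replace(year=target_year, day=28)


report = IncidentReport(date(2025, 9, 1), "EU", "service outage")
print(report.is_complete(), report_due(date(2025, 9, 1), 15), retain_until(date(2025, 9, 1)))
```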

Commitment 10

This focuses on long-term documentation, transparency and traceability for providers of GPAI models with systemic risk. Providers must maintain complete, accessible records of their model architecture, method of integration, safety evaluations and mitigation decisions. The idea is that the regulator (the AI Office) or downstream stakeholders can verify, audit or challenge the provider's practices even years after deployment.

Together, these commitments ensure that providers not only implement comprehensive technical safeguards but also establish clear accountability, maintain regulatory compliance, and encourage safety and responsibility.

Overall, the General-Purpose AI Code of Practice serves as an important framework for ensuring that providers of GPAI models operate responsibly, transparently and in line with the requirements of the EU AI Act. By establishing clear expectations for documentation, transparency and copyright compliance, it allows AI providers to be held accountable.

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.