Purpose: The purpose of this tracker is to identify key federal and state health AI policy activity and summarize laws relevant to the use of AI in health care. The below reflects activity from January 1, 2025 through June 30, 2025. This is published on a quarterly basis.

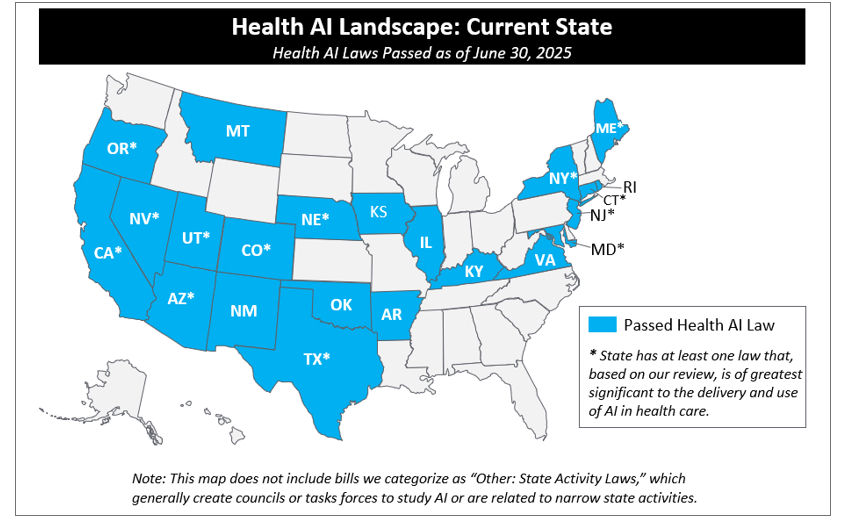

Activity on AI in health care has been a nationwide phenomenon during the 2025 legislative session: as of June 30, 2025, 46 states have introduced over 250 AI bills impacting health care and 17 states passed 27 of those bills into law.

See map below.1

This year, so far, passed laws have primarily focused on three key areas:

1. Use of AI-Enabled Chatbots: Actors across the health care ecosystem are integrating AI chatbots to improve efficiency, enhance patient engagement, and expand access to care. Chatbot functionalities are being leveraged in administrative functions (e.g., in support of patient scheduling) and in clinical functions (e.g., early patient triage). States are taking action to legislate these tools in response to concerns that AI chatbots may misrepresent themselves as humans, produce harmful or inaccurate responses, or not reliably detect crises.

Notably, five bills have been passed, and four signed into law,2 related to AI-enabled chatbots this year. Of those, two directly address the use of chatbots in the delivery of mental health services:

- Utah's HB 452, effective as of 5/7/2025, requires "mental health chatbots" to clearly and conspicuously disclose to the user that the chatbot is AI technology (and not a human) at the beginning of any interaction, before the user accesses features of the chatbot, and any time the user asks or otherwise prompts the chatbot about whether AI is being used. The law prohibits selling or sharing individual user data from mental health chatbots with any third party (with key clinical exceptions3). It also prohibits advertising a specific product or service during the conversation unless the chatbot clearly and conspicuously identifies the advertisement and discloses any sponsorships, business affiliations, or agreements that the supplier has with third parties to promote the product or service. Separately, Utah's Office of Artificial Intelligence Policy has recently published best practices for use of AI by mental health therapists.

- New York's enacted budget (SB 3008), effective beginning 11/5/2025, is more broadly applicable to "AI companions."4 The law prohibits any person or entity from operating or providing an "AI companion" to someone in New York unless the model contains a protocol to take reasonable efforts to detect and address suicidal ideation or expressions of self-harm expressed by the user. That protocol must, at a minimum: (1) detect user expressions of suicidal ideation or self-harm, and (2) refer users to crisis service providers (e.g., suicide prevention and behavioral health crisis hotlines) or other appropriate crisis services when suicidal ideation or thoughts of self-harm are detected. AI companion operators must provide a "clear and conspicuous" notification, either verbally or in writing, that the user is not communicating with a human; that notification must occur at the beginning of any AI companion interaction and at least every three hours thereafter for continuous interactions.

Separately but relatedly, Texas HB 149 (effective beginning 1/1/2026) is more broadly applicable to "artificial intelligence systems"5 and specifically prohibits any development or deployment of these systems that would "incite or encourage" a person to commit physical self-harm, harm others, or engage in criminal activity. While the law is not specific to chatbots, these provisions may impact AI chatbots deployed in the state. The law includes several other broader provisions (see summary below).

Finally, Illinois HB 1806, which was sent to the Governor on June 24 but has not yet been signed, prohibits the use of AI systems in therapy or psychotherapy to make independent therapeutic decisions, directly interact with clients in any form of therapeutic communication, or generate therapeutic recommendations or treatment plans without review and approval by a licensed professional. If signed, this law may substantially impair the use of AI systems for mental health services.

The other two laws that passed addressed concerns about misrepresentation of chatbots as humans, as well as data use and sharing: Maine HP 1154, effective as of 6/18/2025, prohibits chatbots that mislead or deceive users into believing they are interacting with a human, and Utah SB 226, effective as of 5/7/2025, requires "regulated occupations"6 to disclose use of AI in messaging in "high-risk artificial intelligence interactions."

A dozen other chatbot bills were introduced but did not pass, which were mainly general chatbot bills (not specific to health care) focused on setting forth disclosure requirements. There were three bills that did not pass that included provisions specific to healthcare chatbots and/or had mental health specific provisions.7 We anticipate further activity in this area next legislative session.

2. Payor Use of AI: Payors are increasingly adopting AI across operations, from utilization and quality management to fraud detection and claims adjudication, with a recent NAIC survey finding that 84% of health insurers use AI or machine learning across their product lines.8 In response, states are focusing on ways to mitigate potential harms to beneficiaries from its use. Of the approximately 60 bills introduced to govern payor use of AI, only four passed. These four laws focus primarily on prohibiting the sole use of AI in making medical necessity denials or denying prior authorization, and either require physician review of all AI decisions or prohibit payors from replacing physician/peer review of medical appropriateness with an AI tool. For more detail, see below Arizona (HB 2175), Maryland (HB 820), Nebraska (LB 77), and Texas (SB 815).

3. AI in Clinical Care: States are eager to balance AI's potential to support clinical care with the potential risks posed to patients and providers. This year, states have worked to establish guardrails on the use of AI in clinical care, such as provider oversight requirements, transparency mandates, and safeguards against bias and misuse of sensitive health data. More than 20 bills regulating provider use of AI were introduced in 2025. They included language regarding the role of AI in clinical delivery, what provider oversight should be required when using AI tools in clinical decision-making, and how providers should communicate AI use to patients. Four of these bills passed. Texas HB 149 mandates that providers leveraging AI systems for health care services or treatments disclose that use to the patient (or their representative) no later than the date that the service or treatment is first provided (with an exception for emergency situations, when disclosure must be provided "as soon as reasonably possible"). Laws in Nevada (AB 406) and Oregon (HB 2748) prohibit AI systems from representing themselves as licensed providers; Nevada's law focuses on AI systems representing themselves as mental or behavioral health care providers, and Oregon's on AI systems representing themselves as nurses. Texas SB 1188 requires providers leveraging AI for diagnostic or other purposes to review information before entering it into patient records.

While most 2025 legislative sessions have now ended, six states (CA, MA, MI, OH, PA, and WI) remain in session and are actively progressing legislation. We will continue to track legislation in those states. See the table below for a full summary of key health AI laws passed in 2025 and here for a list of all AI laws passed to date.

In Q2, there was significant federal activity. Although the provision was ultimately not enacted, a near-final draft of H.R. 1 ("One Big Beautiful Bill") included language that would have barred state or local governments from enforcing laws or regulations on AI models or systems for up to ten years. More recently, the White House released "Winning the Race: America's AI Action Plan," which takes a significant deregulatory and geopolitical posture and frames AI advancement as essential to American vitality, national security, and scientific leadership. The Plan, among other provisions, directs federal agencies to identify and repeal rules that could hinder AI development. Notably, the plan declares that the "Federal government should not allow AI-related Federal funding to be directed towards states with burdensome AI regulations" and that Federal agencies with AI-related discretionary funding should consider a state's AI regulatory climate when making funding decisions. Separately, the plan calls on the Federal Communications Commission (FCC) to evaluate whether state AI laws interfere with its authorities to regulate, among other things, communication and broadband-enabled health technologies. To accelerate innovation, the plan proposes the establishment of regulatory sandboxes or AI Centers of Excellence across the country, supported by a variety of federal agencies (FDA, SEC, DOC), where developers can deploy and test AI tools in real-world settings. In parallel, the Department of Commerce (DOC), through NIST, would lead domain-specific efforts (e.g., health care, energy, and agriculture) to develop national AI standards and evaluate productivity gains from AI in applied settings.

In addition, federal agencies have solicited comment on AI and health care: In May 2025, the Office of the National Coordinator for Health Information Technology (ONC) and the Centers for Medicare & Medicaid Services (CMS) issued a request for information seeking public feedback on digital tools, including AI, that can improve Medicare beneficiary access, improve interoperability, and reduce administrative burden. On June 27, CMS launched a new model titled the Wasteful and Inappropriate Service Reduction (WISeR) Model to partner with technology companies to "improv[e] and expedit[e]" the prior authorization process compared to Original Medicare's existing processes and to reduce fraud for several services/products that CMS deemed at high risk of fraud or abuse in selected states. CMS also, in the CY 2026 Proposed Medicare Physician Fee Schedule, requested public comments on appropriate payment strategies for software as a service and AI.

For a summary of substantive federal action to date, see the table below.

Health AI Laws Passed in 2025:

The below table represents the health AI laws that passed in 2025. For a full list of all laws prior to and including 2025, please see here.

* Laws with an asterisk are those we consider "key state laws." These are laws that, based on our review, are of greatest significance to the delivery and use of AI in health care because they are broad in scope and directly touch on how health care is delivered or paid for, or because they impose significant requirements on those developing or deploying AI for health care use.

| State | Summary |
|---|---|
| Arizona* | HB 2175 requires that a health care provider individually, exercising independent medical judgment, review claims and prior authorization requests before an insurer denies a claim or prior authorization. The law bans the sole use of any other source to deny a claim or prior authorization. Date Enacted: 5/12/2025 Date Effective: 6/30/2026 |
| Maine* | HP 1154 prohibits the use of artificial intelligence chatbots or similar technologies in trade and commerce in a manner that may mislead or deceive consumers into believing they are interacting with a human being, unless the consumer is clearly and conspicuously notified that they are not engaging with a human being. Date Enacted: 6/12/2025 Date Effective: 6/18/2025 |
| Maryland* | HB 820 requires carriers (including health insurers, dental benefit plans, pharmacy benefit managers that provide utilization review, and any health benefit plans subject to regulation by the state) to ensure that any AI tool used for utilization review bases decisions on medical/clinical history, individual circumstances, and clinical information; does not solely leverage group datasets to make decisions; does not "replace the role of a health care provider in the determination process"; does not result in discrimination; is open for inspection/audit; does not directly or indirectly cause harm; and does not use patient data beyond its intended use. The law mandates that AI tools may not "deny, delay or modify health care services." Date Enacted: 5/20/2025 Date Effective: 10/1/2025 |
| Montana | HB 178 prohibits the use of AI by government entities to "classify a person or group based on behavior, socioeconomic status, or personal characteristics resulting in unlawful discrimination." Requires government entities to provide disclosures on any published material produced by AI and not reviewed by a human. Date Enacted: 5/5/2025 Date Effective: 10/1/2025 |
| Nebraska* | LB 77 establishes that AI algorithms may not be the "sole basis" of a "utilization review agent's" (defined as any person or entity that performs utilization review) decision to "deny, delay, or modify health care services" based in whole or in part on medical necessity. The law requires utilization review agents to disclose use of AI in the utilization review process to each health care provider in their network, to each enrollee, and on their public website. Date Enacted: 6/4/2025 Date Effective: 1/1/2026 |
| Nevada* | AB 406 prohibits AI "providers" from "explicitly or implicitly" indicating that an AI system is capable of providing or is providing professional mental or behavioral health care. Prohibits providers of mental and behavioral health care from using or providing AI systems in connection with the direct provision of care to patients. Sets forth that providers may use AI tools to support administrative tasks, provided that the provider (1) ensures that use complies with all applicable federal and state laws governing patient privacy and security of EHRs, health-related information, and other data, including HIPAA, and (2) reviews the accuracy of any report, data, or information compiled, summarized, analyzed, or generated by AI systems. The law requires the state agency to develop public education material focusing on, among other topics, best practices for AI use by individuals seeking mental or behavioral health care or experiencing a mental or behavioral health event. Additionally, the law prohibits all public schools (including charter schools or university schools) from using AI to "perform the functions and duties of a school counselor, school psychologist, or school social worker" as related to student mental health. Date Enacted: 6/5/2025 Date Effective: Upon passage and approval for the purpose of adopting any regulations and performing any other necessary preparatory administrative tasks to carry out provisions of this act. 7/1/2025 for all other purposes. |
| New Mexico | HB 178 establishes that the Board of Nursing shall "promulgate rules establishing standards for the use of artificial intelligence in nursing." Date Enacted: 4/8/2025 Date Effective: 6/20/2025 |
| New York* | SB 3008 prohibits any person or entity from operating or providing an "AI companion" to someone in New York unless the model contains a protocol to take reasonable efforts to detect and address suicidal ideation or expressions of self-harm expressed by the user. Requires protocols to, at a minimum: (1) detect user expressions of suicidal ideation or self-harm, and (2) refer users to crisis service providers (e.g., suicide prevention and behavioral health crisis hotlines) or other appropriate crisis services when suicidal ideation or thoughts of self-harm are detected. Requires that AI companion operators provide a "clear and conspicuous" notification, either verbally or in writing, that the user is not communicating with a human; that notification must occur at the beginning of any AI companion interaction and at least every three hours thereafter for continuous interactions. Sets forth that the Attorney General has oversight authority and can impose penalties of $15,000/day on an operator that violates the law. Date Enacted: 5/9/2025 Date Effective: 11/5/2025 |
| Oregon* | HB 2748 mandates that "nonhuman" entities, including AI tools, may not use the title of nurse or similar titles, including advanced practice registered nurse, certified registered nurse anesthetist, clinical nurse specialist, nurse practitioner, medication aide, certified medication aide, nursing aide, nursing assistant, or certified nursing assistant. Date Enacted: 6/24/2025 Date Effective: 1/1/2026 |
| Texas* | HB 149 sets requirements for government agency and non-governmental use of AI. Requirements for government agencies include: mandating that government agencies using AI systems that interact with consumers clearly and conspicuously disclose to each consumer, before or at the time of interaction, that the consumer is interacting with an AI system; prohibiting government entities from using AI systems that produce social scoring, or developing or deploying an AI system that uses biometric identifiers to uniquely identify individuals if that use infringes on constitutional rights; and establishing an AI Regulatory Sandbox Program and creating the "Texas Artificial Intelligence Council." Requirements for non-governmental developers and deployers of AI include: prohibiting deployers from deploying AI systems that aim to "incite or encourage" a user to commit self-harm, harm another person, or engage in criminal activity and prohibiting development or deployment of AI systems that discriminate. An AI system deployed in relation to health care services or treatments must be disclosed by the provider to the recipient of health services or their personal representative on the date of service, except in emergencies, when the provider shall disclose as soon as reasonably possible. Date Enacted: 6/22/2025 Date Effective: 1/1/2026 |
| Texas* | SB 815 prohibits a utilization review agent's use of an automated decision system (defined as an algorithm or AI that makes, recommends, or suggests certain determinations) to "make, wholly or partly, an adverse determination." Adverse determinations are defined as determinations that services are not medically necessary or appropriate, or are experimental or investigational. Sets forth that the use of algorithms, AI, or automated decision systems for administrative support or fraud detection is allowable. Empowers the Commissioner of Insurance to audit and inspect use of tools. Date Enacted: 6/20/2025 Date Effective: 9/1/2025 |
| Texas* | SB 1188 requires providers leveraging AI for diagnostic or other purposes to "review all information created with artificial intelligence in a manner that is consistent with medical records standards developed by the Texas Medical Board." In addition, a provider using AI for diagnostic purposes must disclose the use of the technology to their patients. Date Enacted: 6/20/2025 Date Effective: 9/1/2025 |
| Texas | HB 3512 requires the Department of Information Resources to implement state-certified AI training programs (similar to existing cybersecurity training protocols) for agency staff and local governments. Date Enacted: 6/20/2025 Date Effective: 9/1/2025 |
| Utah* | SB 226 repealed Utah SB 149 disclosure provisions and replaced them with disclosure requirements that are similar, but required in narrower scenarios. As with SB 149, the law requires "regulated occupations" to prominently disclose that they are using computer-driven responses before they begin using generative AI for any oral or electronic messaging with an end user. However, this disclosure is only required when the generative AI is "high-risk," which is defined as (a) the collection of personal information, including health, financial, or biometric data and (b) the provision of personalized recommendations that could be relied upon to make significant personal determinations, including medical, legal, financial, or mental health advice or services. Relatedly, in 2025, SB 332 passed, which extended the repeal date of SB 149 to July 1, 2027. Date Enacted: 3/27/2025 Date Effective: 5/7/2025 |
| Utah* | HB 452 requires suppliers of "mental health chatbots"9 to clearly and conspicuously disclose that the chatbot is AI technology and not a human at the beginning of any interaction, before the user accesses features of the chatbot, and any time the user asks or otherwise prompts the chatbot about whether AI is being used. Prohibits "suppliers"10 of mental health chatbots from selling or sharing individual user data with any third party (with key clinical exceptions) and from advertising a specific product or service during the conversation unless the chatbot clearly and conspicuously identifies the advertisement and discloses any sponsorships, business affiliations, or agreements that the supplier has with third parties to promote the product or service. The law does not preclude chatbots from recommending that users seek counseling, therapy, or other assistance, as necessary. The Attorney General may impose penalties for violations of this law. Finally, the law states that it is an affirmative defense to liability under the law if the supplier demonstrates that it maintained documentation that describes development and implementation of the AI model that complies with the law and maintains a policy that meets a long list of requirements, including ensuring that a licensed mental health therapist was involved in the development and review process and having procedures that prioritize user mental health and safety over engagement metrics or profit. For the affirmative defense to be available, the policy must be filed with the Division of Consumer Protection. Date Enacted: 3/25/2025 Date Effective: 5/7/2025 |
| Other: State Activity Laws | Over the past several decades, states have sought to understand AI technology before regulating it. For example, states have created councils to study AI and/or created AI-policy positions within government in charge of establishing AI governance and policy. States have additionally tracked use of AI technology within state agencies. These bills reflect states' interest in the potential role of AI across industries, and potentially in health care specifically. The following passed in 2025: Alabama HB 365, Kentucky SB 4, Maryland SB 705, Maryland HB 956, Mississippi SB 2426, Montana HJR 178, New York SB 822, Texas HB 149 (certain provisions), Texas SB 1964, Texas HB 3512, and West Virginia HB 3187. |

Key Federal Activity

| 2025 Activity To-Date | |
|---|---|
| White House | |
| Congress | Initial drafts of H.R. 1 included a ten-year moratorium on state legislation of AI. After much debate, this provision was struck from the final law enacted on July 4, 2025. Bills to: Several others that touch on AI in health care, which we will report on if they gain traction. |
| HHS Appointments and Announcements | |
| OCR | |
| ONC | |
| CMS | |
| FDA | |
| NIH | |
| DOJ | Litigation continues over alleged use of AI to deny Medicare Advantage claims. In June 2025, DOJ announced charges against over 300 defendants for participation in health care fraud schemes, with a parallel announcement from CMS on the successful prevention of $4 billion in payments for false and fraudulent claims. |
| FTC | |

Footnotes

1 This map does not include bills we categorize as "Other: State Activity Laws," which generally are bills that create councils or task forces to study AI or are related to narrow state activities.

2 Illinois HB 1806 passed and was sent to the Governor on June 24 but, as of the time of publication, has not yet been signed.

3 Exceptions include: if the data is (a) requested by a health care provider with a user's consent; (b) provided to a health plan of a Utah user upon a user's request; or (c) shared by the supplier to ensure the effective functionality of the tool, provided that the supplier and the recipient of such information comply with HIPAA (even if not a covered entity or business associate).

4 AI Companions are defined as: "a system using artificial intelligence, generative artificial intelligence, and/or emotional recognition algorithms designed to simulate a sustained human or human-like relationship with a user by:

- retaining information on prior interactions or user sessions and user preferences to personalize the interaction and facilitate ongoing engagement with the AI companion;

- asking unprompted or unsolicited emotion-based questions that go beyond a direct response to a user prompt; and

- sustaining an ongoing dialogue concerning matters personal to the user."

AI Companions exclude "any system used by a business entity solely for customer service or to strictly provide users with information about available commercial services or products provided by such entity, customer service account information, or other information strictly related to its customer service; any system that is primarily designed and marketed for providing efficiency improvements or, research or technical assistance; or any system used by a business entity solely for internal purposes or employee productivity."

5 "Artificial Intelligence Systems" are defined as "any machine-based system that, for any explicit or implicit objective, infers from the inputs the system receives how to generate outputs, including content, decisions, predictions, or recommendations, that can influence physical or virtual environments."

6 "Regulated occupations" are defined as occupations regulated by the Department of Commerce that require an individual to obtain a license or state certification to practice the occupation. "High-risk artificial intelligence interactions" are defined as interactions with generative artificial intelligence that involve 1) the collection of sensitive personal information (including health data, financial data, or biometric data); 2) the provision of personalized recommendations, advice, or information that could be reasonably relied upon to make significant personal decisions (including medical advice or services or mental health advice or services, among others), or 3) other applications as defined by division rule.

7 California SB 243, New York AB 6767, and North Carolina SB 624 were all introduced in 2025 and contain provisions focused on AI chatbots and mental health.

8 Health Survey Report - FINAL 5.9.25.pdf

10 "Supplier" means a seller, lessor, assignor, offeror, broker, or other person who regularly solicits, engages in, or enforces consumer transactions, whether or not the person deals directly with the consumer. Utah Code 13-11-3
